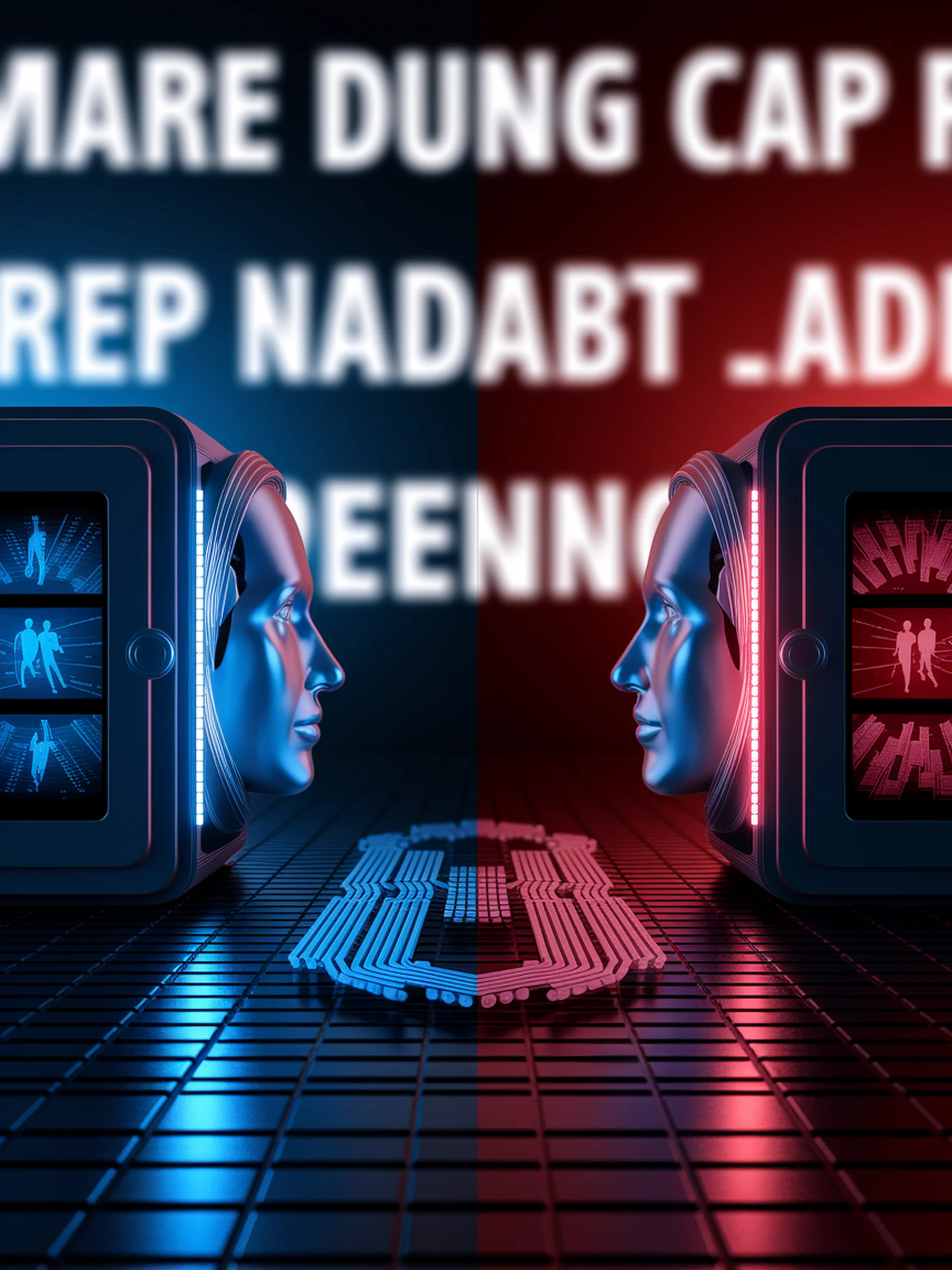

Ideology in AI: Uncovering LLM Biases

How language models reflect their creators' political perspectives

This research examines how large language models encode and reproduce the ideological perspectives of their creators through systematic analysis across multiple languages.

- Alignment on the political spectrum varies significantly between models from different creators

- LLMs show consistent ideological patterns even across different languages

- Systems exhibit identifiable political leanings that match their developers' known positions

- Models demonstrate persistent biases that influence how they present information to users

For security professionals and policymakers, this research highlights critical concerns about the potential for information manipulation and the need for transparent AI governance frameworks to address ideological biases embedded in widely used AI systems.

Large Language Models Reflect the Ideology of their Creators