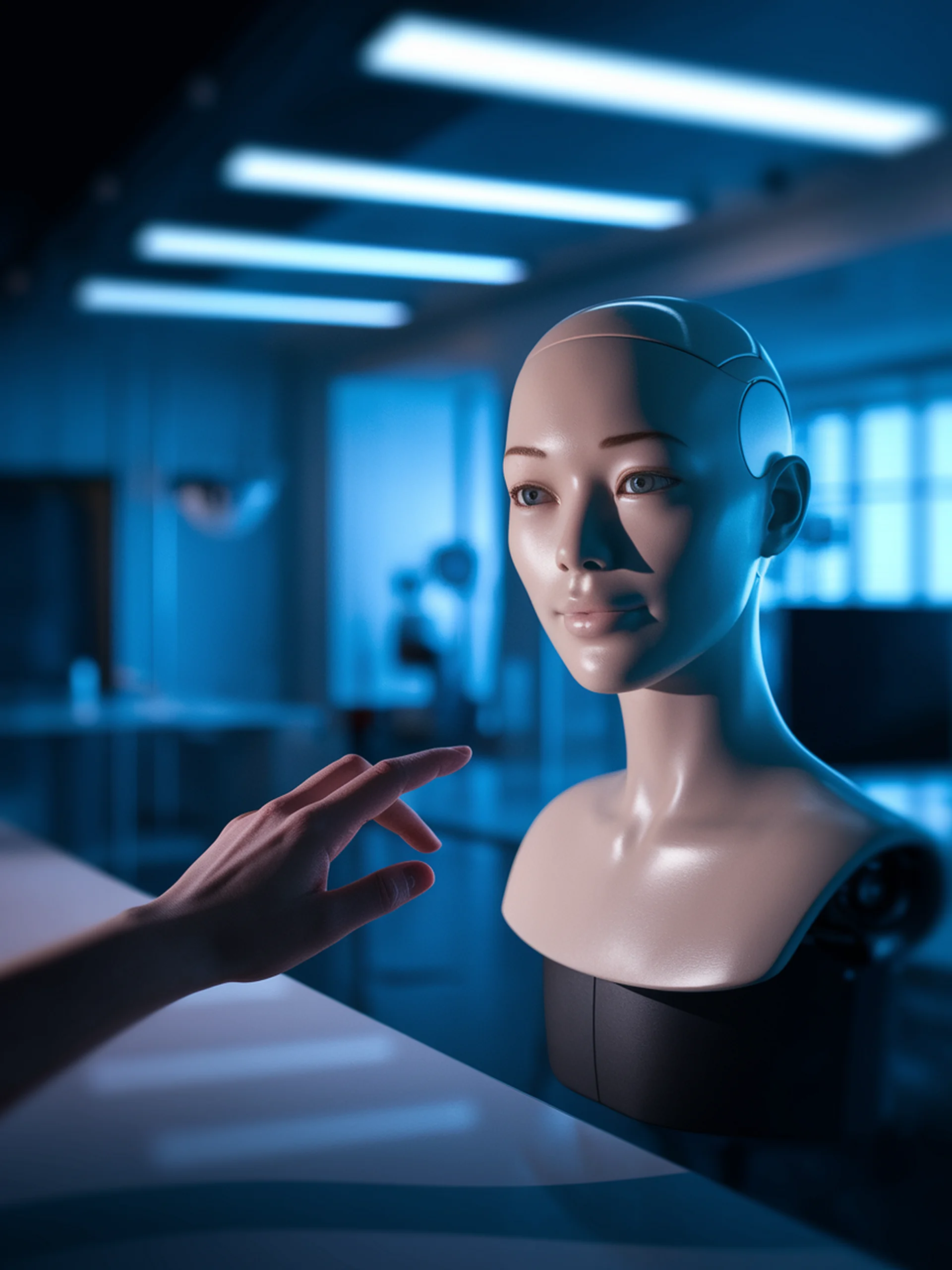

The Hidden Dangers of 'Humanized' AI

How LLM chatbots with human characteristics may enable manipulation

This research examines how large language model chatbots mimicking human characteristics create serious security and trust concerns.

- Personification risk: AI chatbots adopting human faces, names, and personalities may create false intimacy with users

- Regulatory focus: The EU AI Act attempts to address these manipulation risks but may face implementation challenges

- Security implications: Human-like AI may exploit psychological vulnerabilities by creating the illusion of a relationship and of being understood

- Trust paradox: Features designed to increase user trust may simultaneously enable more effective manipulation

This research matters for security professionals because it shows how seemingly benign design choices in AI systems can create novel manipulation vectors that require specific regulatory and design safeguards.

Manipulation and the AI Act: Large Language Model Chatbots and the Danger of Mirrors