Securing Federated LLMs

A Novel Framework for Enhanced Robustness Against Adversarial Attacks

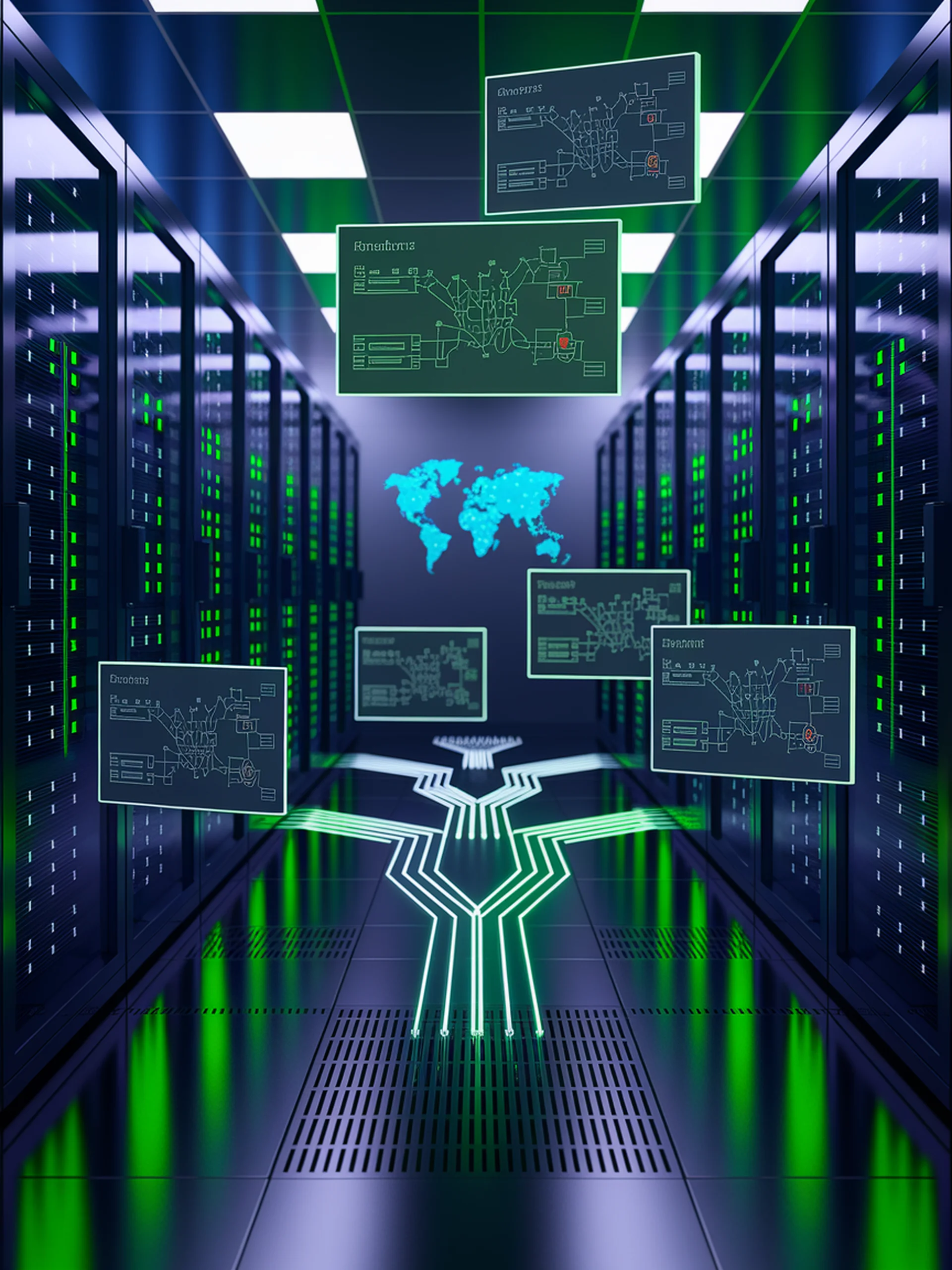

FedEAT is a framework for securing federated large language models: it optimizes their robustness against malicious clients and adversarial attacks while preserving data privacy.

Key Innovations:

- Combines federated learning with LLMs, so training can draw on distributed data while the raw data stays with its owners

- Addresses core security vulnerabilities in distributed LLM training environments

- Addresses the computational cost of deploying LLMs

- Is especially valuable in sensitive domains where data privacy is paramount

For security professionals, this research offers a path to deploying powerful language models in privacy-sensitive environments while maintaining robust defenses against adversarial attacks and malicious participants.

FedEAT: A Robustness Optimization Framework for Federated LLMs