Teleporting Security Across Language Models

Zero-shot mitigation of Trojans in LLMs without model-specific alignment data

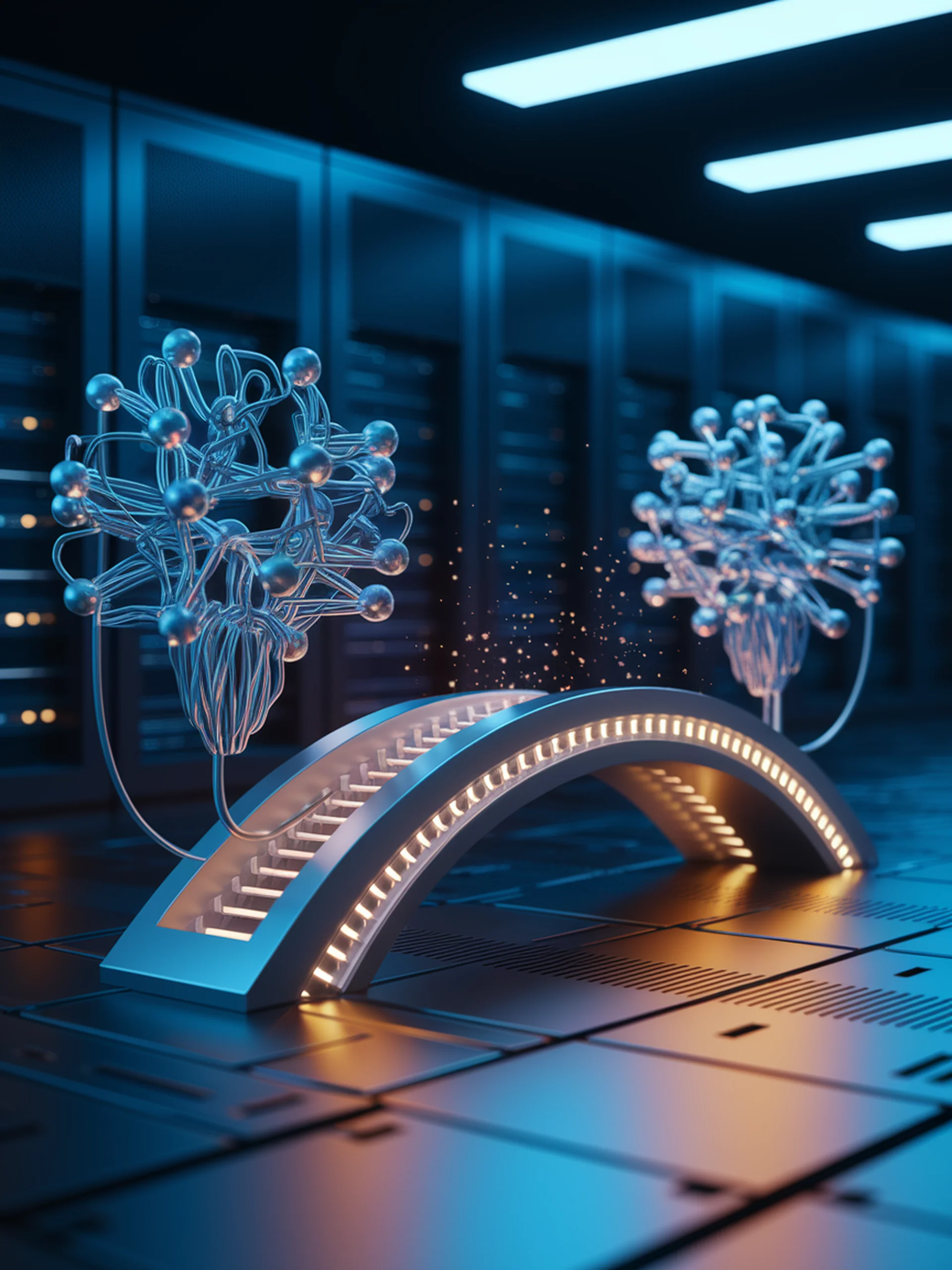

TeleLoRA is an approach for transferring security alignment between different large language models (LLMs). It lets Trojans be removed from a new model without first collecting alignment data specific to that model.

- Creates a unified generator of LoRA adapter weights that can be applied to unseen models

- Enables zero-shot Trojan mitigation by leveraging knowledge from previously aligned LLMs

- Demonstrates effectiveness across multiple model architectures without requiring new training data

- Offers a way to scale Trojan mitigation as the number of deployed LLMs keeps growing
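The central idea in these bullets is a generator that *outputs* LoRA adapter weights instead of training one fixed adapter per model. The sketch below illustrates only that idea, under loud assumptions. `LoRAGenerator`, `generate`, `apply_lora`, and the "layer embedding" input are hypothetical names. The generator is a toy linear hypernetwork with random, untrained parameters. Nothing here reproduces TeleLoRA's actual architecture, inputs, or training procedure. What it does show is the standard LoRA update, W' = W + (alpha / r) · B A, with the low-rank factors A and B produced by one shared generator that can be queried for layers of any shape.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    """Plain-Python matrix product X @ Y."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


class LoRAGenerator:
    """Hypothetical linear hypernetwork mapping a layer embedding to LoRA factors.

    Illustrative assumption only: parameters are random and untrained, and this
    is NOT TeleLoRA's published architecture.
    """

    def __init__(self, embed_dim: int, rank: int, seed: int = 0):
        self.embed_dim = embed_dim
        self.rank = rank
        self.seed = seed

    def _basis(self, tag: str, rows: int, cols: int) -> List[Matrix]:
        # One (rows x cols) basis matrix per embedding coordinate. Seeding by
        # tag and shape makes the same generator return the same parameters
        # on every call for a given layer shape.
        rng = random.Random(f"{self.seed}-{tag}-{rows}-{cols}")
        return [[[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]
                for _ in range(self.embed_dim)]

    def generate(self, layer_embedding: List[float], d_out: int,
                 d_in: int) -> Tuple[Matrix, Matrix]:
        """Return (A, B) with A: rank x d_in and B: d_out x rank."""
        if len(layer_embedding) != self.embed_dim:
            raise ValueError("layer embedding has the wrong dimension")

        def combine(basis: List[Matrix]) -> Matrix:
            # Each factor is a linear combination of basis matrices, weighted
            # by the coordinates of the layer embedding.
            rows, cols = len(basis[0]), len(basis[0][0])
            return [[sum(e * basis[k][i][j] for k, e in enumerate(layer_embedding))
                     for j in range(cols)] for i in range(rows)]

        A = combine(self._basis("A", self.rank, d_in))
        B = combine(self._basis("B", d_out, self.rank))
        return A, B


def apply_lora(W: Matrix, A: Matrix, B: Matrix, alpha: float, rank: int) -> Matrix:
    """Merge a LoRA update into W: W' = W + (alpha / rank) * (B @ A)."""
    delta = matmul(B, A)  # d_out x d_in, same shape as W
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


# Usage: patch a 3x3 weight matrix of some target layer.
gen = LoRAGenerator(embed_dim=4, rank=2)
W = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
A, B = gen.generate([0.1, -0.2, 0.3, 0.05], d_out=3, d_in=3)
W_patched = apply_lora(W, A, B, alpha=4.0, rank=2)
```

Because the generator, not a per-model adapter, holds the learned parameters, the same object can be queried for a layer it was never fitted on. In this toy that amounts only to regenerating factors of a new shape. Whether such generated adapters actually remove Trojans depends entirely on how the real generator is trained, which this sketch does not model.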

The practical benefit is that organizations can protect newly deployed models against malicious triggers without collecting and labeling a model-specific alignment dataset for each deployment.