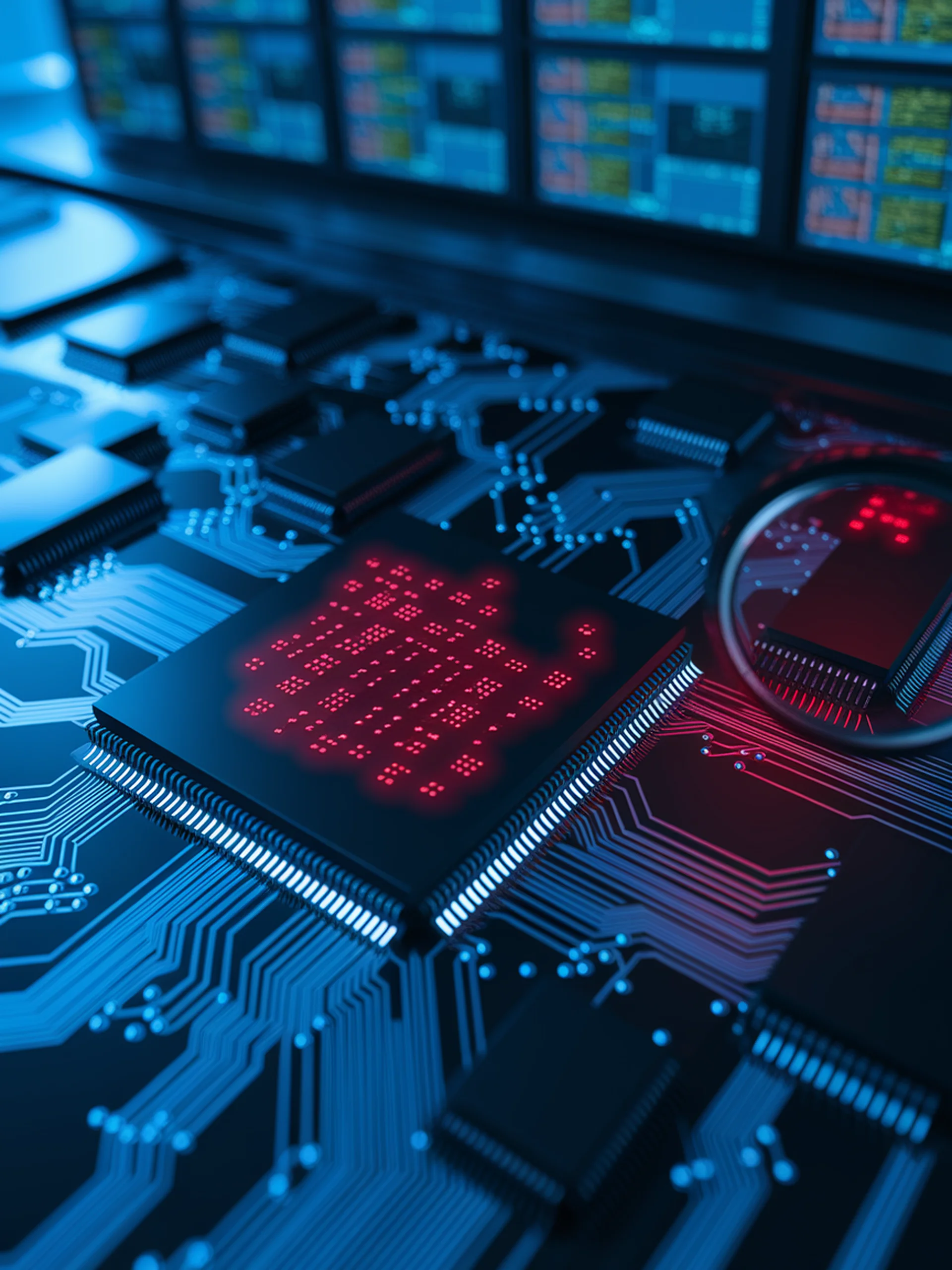

Backdoor Vulnerabilities in AI Vision Systems

Detecting poisoned samples in CLIP models with 98% accuracy

This research exposes critical security vulnerabilities in CLIP (Contrastive Language-Image Pretraining) models and introduces a method for detecting backdoor-poisoned training samples.

- CLIP models can be backdoored when as little as 0.01% of the training data is contaminated (about 1 in every 10,000 samples)

- The researchers found that poisoned samples show distinctive patterns in their learned representations

- The proposed detection framework identifies backdoor samples with 98% accuracy

- The work highlights serious security concerns for large-scale AI models trained on unscreened web data
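
To make the idea of spotting unusual patterns in representations more concrete, here is a minimal sketch of one generic outlier-scoring approach: rank each sample by the distance to its k-th nearest neighbor in embedding space. This is not the paper's detector. The function name `knn_distance_scores`, the value of `k`, and the synthetic embeddings are all assumptions made for illustration, and the demo assumes that suspicious samples sit in sparser regions of the embedding space than clean ones.

```python
import numpy as np


def knn_distance_scores(embeddings: np.ndarray, k: int = 5) -> np.ndarray:
    """Score each row by the distance to its k-th nearest neighbor.

    Hypothetical helper for illustration: a higher score means the sample
    is more isolated from the rest of the data.
    """
    # L2-normalize rows so Euclidean distance tracks cosine similarity.
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    x = embeddings / np.clip(norms, 1e-12, None)
    # For unit vectors, squared distance = 2 - 2 * cosine similarity.
    sims = x @ x.T
    dists = np.sqrt(np.clip(2.0 - 2.0 * sims, 0.0, None))
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbor
    # k-th smallest distance in each row.
    return np.partition(dists, k - 1, axis=1)[:, k - 1]


# Synthetic stand-in for image embeddings (assumed data, not real CLIP features).
rng = np.random.default_rng(0)
dim, per_cluster, n_outliers = 16, 200, 3

# Clean samples: three tight clusters around random centers.
centers = rng.normal(size=(3, dim))
clean = np.concatenate(
    [c + 0.05 * rng.normal(size=(per_cluster, dim)) for c in centers]
)
# "Suspicious" samples: isolated points in random directions.
outliers = rng.normal(size=(n_outliers, dim))

embeddings = np.concatenate([clean, outliers])
n_clean = len(clean)

scores = knn_distance_scores(embeddings, k=5)
# Flag the n_outliers highest-scoring samples.
flagged = np.argsort(scores)[-n_outliers:]
print("flagged indices:", sorted(flagged.tolist()))
```

On real data the embeddings would come from the model under inspection, and the number of samples to flag (or a score threshold) would have to be chosen and validated separately. The dense pairwise distance matrix used here also only suits small datasets; web-scale data would need an approximate nearest-neighbor index.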

Why it matters: As organizations deploy more vision-language models trained on public data, adversaries could exploit these backdoor vulnerabilities to manipulate model behavior in targeted ways.

Detecting Backdoor Samples in Contrastive Language Image Pretraining