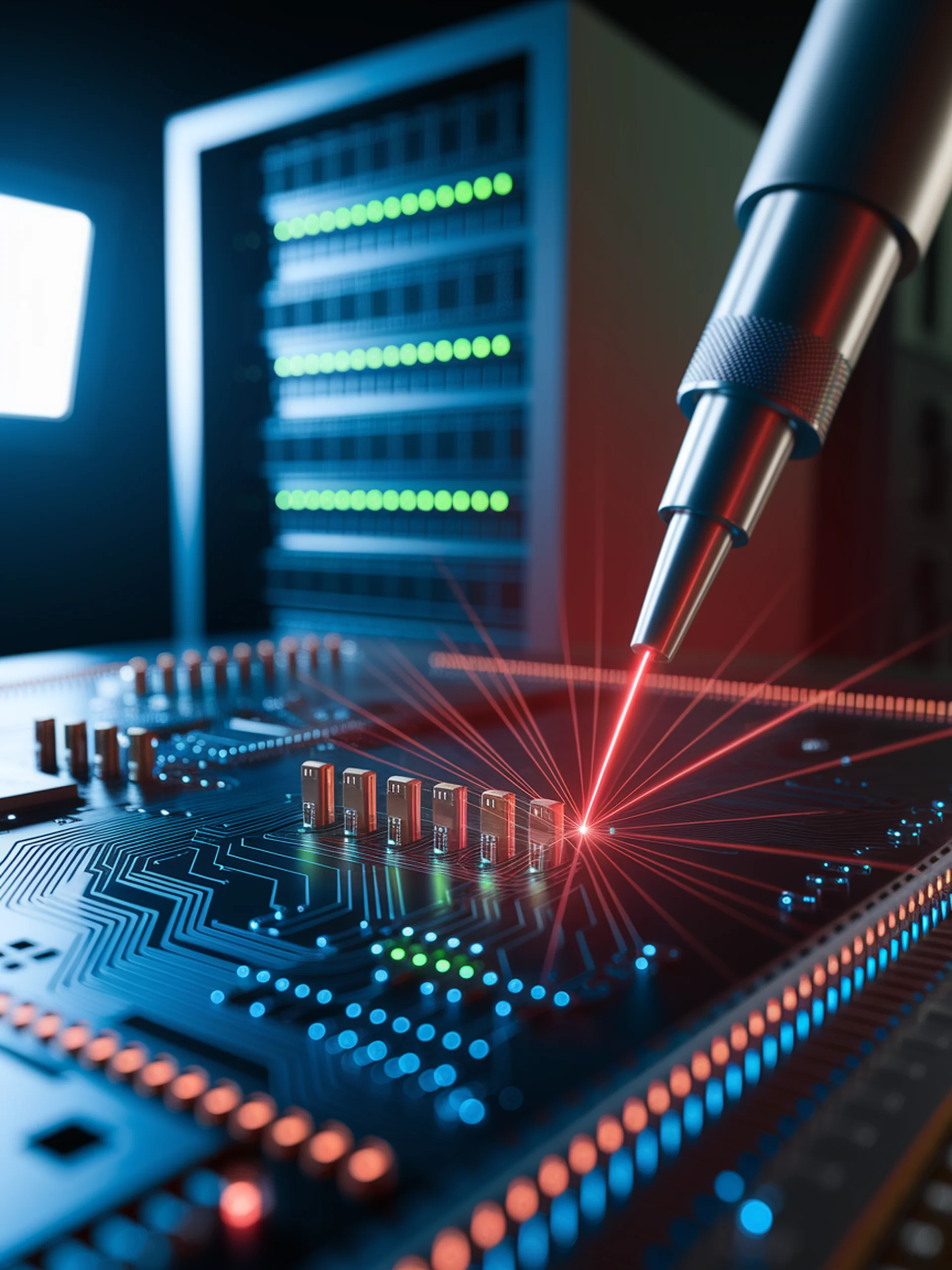

Targeted Bit-Flip Attacks on LLMs

How evolutionary optimization can compromise model security with minimal effort

This research introduces GenBFA, an evolutionary optimization approach that identifies the most critical parameters to target in bit-flip attacks on large language models (LLMs). It shows that a handful of targeted bit changes can cause catastrophic failures.
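
The sketch below makes the "minimal change, catastrophic effect" point concrete by showing what a single bit flip does to one stored weight. It assumes two common storage formats, signed 8-bit quantized integers and IEEE-754 float32. The source does not say which precision the attacked models use, and the helper names are illustrative only.

```python
import struct

def flip_bit_int8(q: int, bit: int) -> int:
    """Flip one bit (0 = least significant, 7 = sign bit) of a signed 8-bit integer."""
    raw = (q & 0xFF) ^ (1 << bit)           # work on the two's-complement bit pattern
    return raw - 256 if raw >= 128 else raw  # map back to the signed range -128..127

def flip_bit_float32(x: float, bit: int) -> float:
    """Flip one bit (0..31, 31 = sign bit) of an IEEE-754 single-precision value."""
    (raw,) = struct.unpack("<I", struct.pack("<f", x))   # float32 -> 32-bit pattern
    (flipped,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return flipped

print(flip_bit_int8(3, 7))         # -125: small positive weight becomes large negative
print(flip_bit_float32(0.5, 30))   # 2**127, roughly 1.7e38: top exponent bit flipped
```

Flipping the sign bit of an int8 weight of 3 turns it into -125. Under any positive dequantization scale, the weight therefore changes sign and grows about 42-fold. Flipping the highest exponent bit of a float32 value of 0.5 yields about 1.7 × 10^38.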

- Only 5-20 bit-flips can degrade LLM performance by up to 70% on benchmarks

- The AttentionBreaker framework targets the attention mechanism, identified as a critical point of vulnerability

- Evolutionary optimization efficiently navigates billions of parameters to find optimal attack points

- Attacks work across various model architectures (Llama, GPT, etc.) with minimal computational resources
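
A toy genetic algorithm can illustrate the evolutionary search described in the bullets above. This is a hypothetical sketch, not GenBFA itself. The weights, the `damage` score, the fitness ordering and all hyperparameters are assumptions. The `damage` score is the L1 distance between clean and attacked int8 weights and stands in for a real loss increase. Each individual is a set of `(weight_index, bit)` flips. Fitness ranks any individual that reaches the damage target above any that does not, and among successful individuals it prefers fewer flips.

```python
import math
import random

def flip_bit_int8(q, bit):
    """Flip one bit of a signed 8-bit integer (two's complement)."""
    raw = (q & 0xFF) ^ (1 << bit)
    return raw - 256 if raw >= 128 else raw

def damage(weights, flips):
    """Toy stand-in for loss increase: L1 distance between clean and attacked weights."""
    attacked = list(weights)
    for idx, bit in flips:
        attacked[idx] = flip_bit_int8(attacked[idx], bit)
    return sum(abs(a - w) for a, w in zip(attacked, weights))

def evolve(weights, target, pop_size=30, generations=60, seed=0):
    """Search for a small set of (index, bit) flips whose damage reaches `target`."""
    rng = random.Random(seed)
    genes = [(i, b) for i in range(len(weights)) for b in range(8)]

    def fitness(ind):
        d = damage(weights, ind)
        # Successful sets first, ranked by fewest flips; otherwise rank by damage.
        return (1, -len(ind), d) if d >= target else (0, d, -len(ind))

    def crossover(a, b):
        pool = sorted(a | b)
        child = {g for g in pool if rng.random() < 0.5}
        return frozenset(child or {rng.choice(pool)})  # never return an empty set

    def mutate(ind):
        ind = set(ind)
        op = rng.random()
        if op < 0.4:
            ind.add(rng.choice(genes))                 # add a flip
        elif op < 0.7 and len(ind) > 1:
            ind.discard(rng.choice(sorted(ind)))       # drop a flip
        else:
            ind.discard(rng.choice(sorted(ind)))       # swap one flip for another
            ind.add(rng.choice(genes))
        return frozenset(ind)

    pop = [frozenset(rng.sample(genes, rng.randint(1, 4))) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        elite = pop[: pop_size // 4]                   # truncation selection + elitism
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            children.append(mutate(crossover(a, b)))
        pop = elite + children
    return max(pop, key=fitness)

weights = [3, -7, 12, 0, 25, -1, 9, 4]   # hypothetical int8 weights
best = evolve(weights, target=300)
print(sorted(best), damage(weights, best))
print(math.comb(len(weights) * 8, 3))    # 3-flip subsets even in this toy: 41664
```

The best quarter of each generation survives unchanged, so the best fitness never decreases from one generation to the next. In a real model the candidate pool is billions of bits, and the number of k-flip subsets grows roughly as C(N, k). Exhaustive enumeration is out of reach at that scale, which motivates a guided search.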

Why it matters: As LLMs are integrated into critical systems, these hardware-level vulnerabilities pose significant security risks that standard software protections cannot address. Organizations deploying LLMs need hardware-level safeguards against such attacks.

GenBFA: An Evolutionary Optimization Approach to Bit-Flip Attacks on LLMs